Mutual information

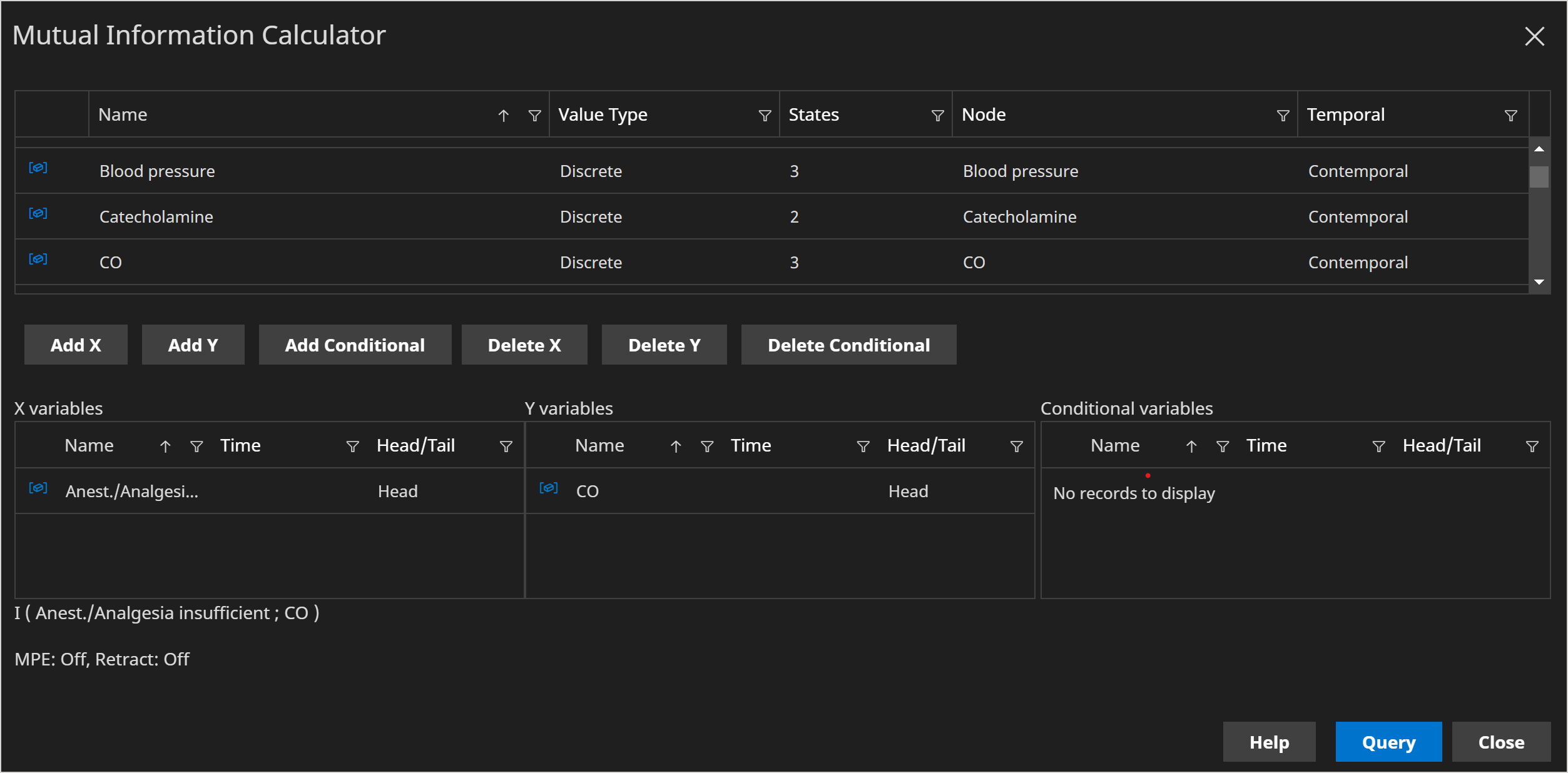

User Interface since version 11.0

The mutual information (MI) between two variables is a measure of the dependence between them. It quantifies how much information is gained about one variable by observing the other.

The expression I(X;Y) is used to denote the mutual information between variables X and Y. X and/or Y can also represent groups of variables.
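For reference, the standard textbook definition for discrete variables is sketched below. The page itself does not state a formula, so the notation here is an assumption: p(x,y) denotes the joint distribution and p(x), p(y) the marginals. For continuous variables the sums become integrals, and the base of the logarithm sets the unit (base 2 gives bits, base e gives nats).

$$
I(X;Y) = \sum_{x} \sum_{y} p(x,y) \, \log \frac{p(x,y)}{p(x)\,p(y)}
$$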

The expression I(X;Y|Z) is used to denote the conditional mutual information between X and Y given Z. Again, X, Y, or Z can be groups of variables.
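The conditional form can be written in the same way. This is the standard discrete definition rather than something stated on this page, with p(x,y,z) assumed to be the joint distribution over all three variables (or groups of variables):

$$
I(X;Y \mid Z) = \sum_{x} \sum_{y} \sum_{z} p(x,y,z) \, \log \frac{p(x,y \mid z)}{p(x \mid z)\,p(y \mid z)}
$$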

Mutual information is never negative. A value of zero indicates no dependence between X and Y, and larger values indicate stronger dependence.
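The zero-for-independence property can be checked numerically. The following is a minimal sketch in plain Python, not the product's own implementation: the function name `mutual_information`, the nested-list joint table, and the choice of log base 2 (bits) are all assumptions made for illustration.

```python
import math


def mutual_information(joint):
    """Return I(X;Y) in bits for a discrete joint table joint[x][y].

    Hypothetical helper for illustration; assumes the entries sum to 1.
    """
    # Marginals: p(x) sums each row, p(y) sums each column.
    px = [sum(row) for row in joint]
    py = [sum(col) for col in zip(*joint)]

    mi = 0.0
    for i, row in enumerate(joint):
        for j, pxy in enumerate(row):
            # Zero-probability cells contribute nothing (0 * log 0 is taken as 0).
            if pxy > 0:
                mi += pxy * math.log2(pxy / (px[i] * py[j]))
    return mi


# X and Y independent: the joint equals the product of the marginals.
independent = [[0.25, 0.25],
               [0.25, 0.25]]

# X fully determines Y: all probability mass lies on the diagonal.
dependent = [[0.5, 0.0],
             [0.0, 0.5]]

mi_independent = mutual_information(independent)
mi_dependent = mutual_information(dependent)
```

With these two tables, the independent case yields zero, while the fully dependent case yields one bit, which is the entropy of a fair binary variable.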