Kullback-Leibler divergence

Since version 7.16. User Interface since version 11.0.

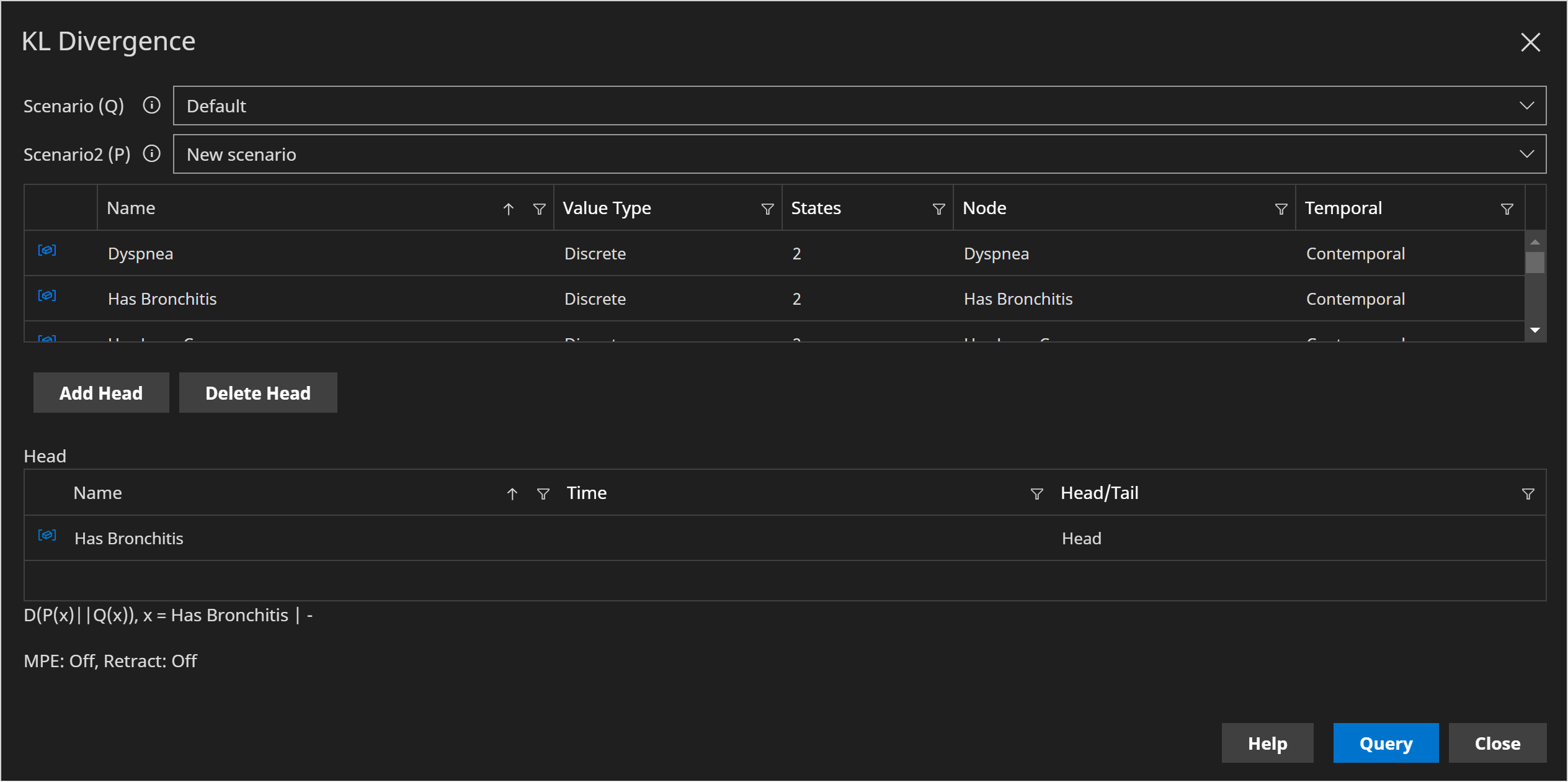

The Kullback-Leibler divergence (KL divergence) is an information-theoretic measure that quantifies the difference between two probability distributions.

The divergence of a distribution P(x) from a distribution Q(x) is denoted D(P||Q) or D(P(x)||Q(x)).
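
For reference, the standard textbook definition for discrete distributions is given below. The logarithm base is an assumption here, since this page does not state which one is used: the natural logarithm gives a result in nats, and base 2 gives bits.

$$
D(P \| Q) = \sum_{x} P(x) \log \frac{P(x)}{Q(x)}
$$

By convention, terms with P(x) = 0 contribute zero, and the divergence is infinite if Q(x) = 0 for any x where P(x) > 0.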

info

KL divergence is not a metric: it is not symmetric, so in general D(P||Q) != D(Q||P).
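
The asymmetry can be checked numerically. The following is a minimal sketch in plain Python, not part of any product API: the function name `kl_divergence` and the two example distributions are hypothetical, and the natural logarithm (nats) is assumed.

```python
import math


def kl_divergence(p, q):
    """Return D(P||Q) in nats for two discrete distributions.

    p and q are sequences of probabilities over the same states.
    This helper is illustrative only.
    """
    total = 0.0
    for p_i, q_i in zip(p, q):
        if p_i == 0.0:
            continue  # a state with P(x) = 0 contributes nothing
        if q_i == 0.0:
            return math.inf  # P puts mass where Q has none
        total += p_i * math.log(p_i / q_i)
    return total


# Two hypothetical distributions over a binary variable.
p = [0.5, 0.5]
q = [0.9, 0.1]

d_pq = kl_divergence(p, q)  # approximately 0.511 nats
d_qp = kl_divergence(q, p)  # approximately 0.368 nats

print(f"D(P||Q) = {d_pq:.4f}")
print(f"D(Q||P) = {d_qp:.4f}")
```

Swapping the arguments changes the result, which is why the order of P and Q matters when reporting a KL divergence.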

info

KL divergence can be used in many settings. In a Bayesian setting, Q(x) is the prior and P(x) is the posterior, and D(P||Q) quantifies how much the distribution (or model) changes as a result of an observation.
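
As a small illustration of the Bayesian case, the sketch below updates a prior over two hypotheses with a single observation and measures the change as D(posterior||prior). Everything in it is an assumption made for the example: the coin scenario, the likelihood values, the helper name `kl_divergence`, and the use of natural logarithms.

```python
import math


def kl_divergence(p, q):
    """Return D(P||Q) in nats for two discrete distributions (illustrative helper)."""
    total = 0.0
    for p_i, q_i in zip(p, q):
        if p_i == 0.0:
            continue  # a state with P(x) = 0 contributes nothing
        if q_i == 0.0:
            return math.inf  # P puts mass where Q has none
        total += p_i * math.log(p_i / q_i)
    return total


# Hypothetical scenario: a coin is either fair or biased towards heads.
prior = [0.5, 0.5]             # Q(x): P(fair), P(biased)
likelihood_heads = [0.5, 0.8]  # P(heads | fair), P(heads | biased)

# Bayes' rule after observing a single head.
unnormalised = [pr * lk for pr, lk in zip(prior, likelihood_heads)]
evidence = sum(unnormalised)
posterior = [u / evidence for u in unnormalised]  # P(x)

# Change in belief caused by the observation.
change = kl_divergence(posterior, prior)

print(f"posterior = {posterior}")
print(f"D(posterior||prior) = {change:.4f} nats")
```

An observation that leaves the posterior equal to the prior gives a divergence of zero, and a more surprising observation gives a larger value.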