Value of information

Value of Information (VOI) is a tool for determining which variables are most likely to reduce the uncertainty in a variable of interest.

NOTE

For Decision Graphs, this can also take costs into account.

The uncertainty (measured using entropy for discrete variables) tells us how unsure we currently are about the state of a variable. For example, suppose a variable X has states False and True. If X is currently False with probability 0.6 and True with probability 0.4, we are much less certain about its state than if X is False with probability 0.1 and True with probability 0.9.
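As a rough illustration of the two distributions above, the following sketch computes Shannon entropy for each. The function name `entropy` and the choice of base 2 (bits) are assumptions made for this example; the application may report entropy in a different unit.

```python
import math

def entropy(probabilities, base=2.0):
    """Shannon entropy of a discrete distribution.

    States with zero probability contribute nothing to the sum.
    The base is an assumption for this sketch (2.0 gives bits).
    """
    return -sum(p * math.log(p, base) for p in probabilities if p > 0.0)

# X is False with probability 0.6 and True with probability 0.4
uncertain = entropy([0.6, 0.4])

# X is False with probability 0.1 and True with probability 0.9
confident = entropy([0.1, 0.9])

print(f"entropy of (0.6, 0.4): {uncertain:.3f} bits")
print(f"entropy of (0.1, 0.9): {confident:.3f} bits")
```

The first distribution has the higher entropy, which matches the intuition that it is the less certain of the two.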

The goal of Value of Information is to decrease this uncertainty by identifying which other variables, once their state is known, are most likely to reduce it.

Value of Information can be used to build troubleshooting applications. The variables most likely to reduce the uncertainty are the questions the system should ask first.
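The idea of asking the most informative question first can be sketched by ranking candidate variables by their mutual information with the hypothesis. This is a minimal illustration only: the variable names and joint probability tables below are hypothetical, and a real application would obtain these distributions from the network by inference rather than from hard-coded tables.

```python
import math

def mutual_information(joint, base=2.0):
    """I(H;T) from a joint table where joint[h][t] = P(H=h, T=t)."""
    p_h = [sum(row) for row in joint]          # marginal over hypothesis states
    p_t = [sum(col) for col in zip(*joint)]    # marginal over test states
    total = 0.0
    for h, row in enumerate(joint):
        for t, p in enumerate(row):
            if p > 0.0:
                total += p * math.log(p / (p_h[h] * p_t[t]), base)
    return total

# Hypothetical joint distributions P(Fault, Test) for three candidate questions.
candidates = {
    "BatteryTest": [[0.45, 0.05], [0.05, 0.45]],  # strongly related to the fault
    "FuelGauge": [[0.30, 0.20], [0.20, 0.30]],    # weakly related
    "RadioWorks": [[0.25, 0.25], [0.25, 0.25]],   # independent of the fault
}

# Most informative question first.
ranking = sorted(
    candidates,
    key=lambda name: mutual_information(candidates[name]),
    reverse=True,
)

for name in ranking:
    print(f"{name}: {mutual_information(candidates[name]):.3f} bits")
```

A variable that is independent of the hypothesis has zero mutual information, so it falls to the bottom of the list and is not worth asking about.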

Opening the Value of Information window

The Value of Information window can be accessed by clicking the Value of Information button on the Analysis ribbon tab.

Calculating Value of Information

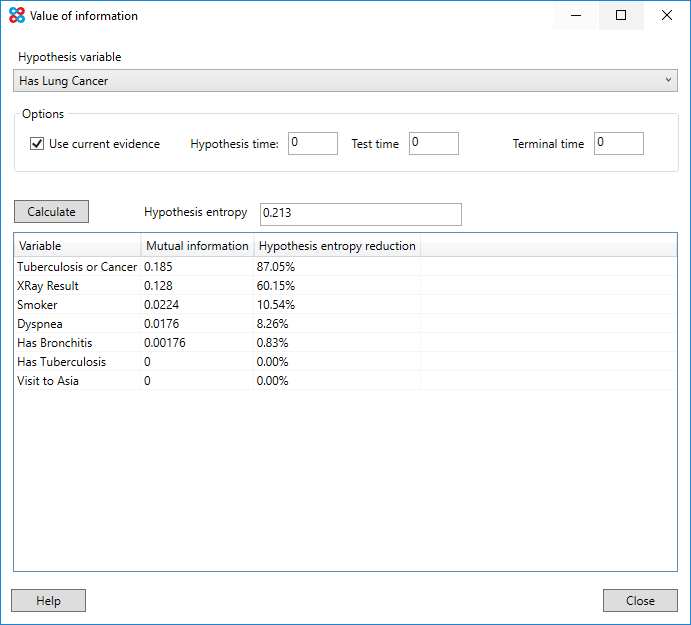

Select the Hypothesis variable. This is the variable whose uncertainty you want to reduce, for example the target of a troubleshooting system.

When the Use current evidence check box is selected, the calculations take into account any evidence currently set.

Click the Calculate button to perform the calculations. The Hypothesis entropy text box displays the current level of uncertainty, and the table lists the other variables in decreasing order of how much each is expected to reduce that uncertainty.

NOTE

The most useful column is Hypothesis entropy reduction, which tells us the percentage by which the uncertainty metric is reduced.
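One plausible way to arrive at a percentage like this is to compare the entropy of the hypothesis before observing a test variable with its expected entropy afterwards. The sketch below does that for a hypothetical joint table; it assumes the percentage is the expected reduction divided by the prior entropy, which may not be exactly how the application computes the column.

```python
import math

def entropy(probabilities, base=2.0):
    """Shannon entropy of a discrete distribution (base 2 assumed here)."""
    return -sum(p * math.log(p, base) for p in probabilities if p > 0.0)

def entropy_reduction_percent(joint, base=2.0):
    """Expected percentage drop in the entropy of H after observing T.

    joint[h][t] = P(H=h, T=t). Assumes the prior entropy of H is non-zero.
    """
    prior = entropy([sum(row) for row in joint], base)
    expected_posterior = 0.0
    for column in zip(*joint):                 # one column per state of T
        p_t = sum(column)
        if p_t > 0.0:
            posterior = [p / p_t for p in column]   # P(H | T=t)
            expected_posterior += p_t * entropy(posterior, base)
    return 100.0 * (prior - expected_posterior) / prior

# Hypothetical joint distribution P(H, T).
percent = entropy_reduction_percent([[0.45, 0.05], [0.05, 0.45]])
print(f"expected entropy reduction: {percent:.1f}%")
```

Under this reading, a value near 100% means the test variable is expected to almost fully determine the hypothesis, while a value near 0% means observing it is expected to tell us very little.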

Time series

A number of options affect how the calculations are performed with time series. These are explained below.

Consider a hypothesis variable H and a test variable T.

Hypothesis time

The Hypothesis time is the time (t) associated with the Hypothesis variable during the calculations.

NOTE

The mutual information I(H[time = t]; T) is calculated for H at time t. (Note that T could also have a time, if it is a temporal variable.)

Test time

The Test time is the time (t) associated with the Test variable during the calculations.

NOTE

The mutual information I(H; T[time = t]) is calculated for T at time t. (Note that H could also have a time, if it is a temporal variable.)

Terminal time

If a time series model has any terminal nodes, a terminal time (absolute, zero-based) is required. The terminal time determines the point at which terminal nodes link to temporal nodes.