Entropy

The entropy (or Shannon entropy) of a distribution is a measure of its uncertainty.

NOTE

A value of zero indicates an outcome that is certain, for example a distribution with evidence set on its variable(s).

The expression H(X) is used to denote the entropy of a variable X. X can also represent groups of variables.

The expression H(X|Z) is used to denote the conditional entropy of X given Z. Again X or Z can be groups of variables.
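As an illustration of the two quantities above, here is a minimal sketch for discrete distributions only. The function names `entropy` and `conditional_entropy` and the table layout `joint[z][x]` are assumptions made for this example; they are not part of the product API.

```python
import math


def entropy(probs, base=2.0):
    """H(X) for a discrete distribution given as a list of probabilities.

    Zero-probability states are skipped, following the convention 0 * log(0) = 0.
    """
    return -sum(p * math.log(p, base) for p in probs if p > 0.0)


def conditional_entropy(joint, base=2.0):
    """H(X|Z) from a joint table where joint[z][x] = P(X=x, Z=z).

    Uses H(X|Z) = -sum over x,z of P(x,z) * log(P(x,z) / P(z)).
    """
    h = 0.0
    for row in joint:
        p_z = sum(row)  # marginal P(Z=z)
        for p_xz in row:
            if p_xz > 0.0:
                h -= p_xz * math.log(p_xz / p_z, base)
    return h


# Hypothetical usage: a fair coin, and a joint table in which Z fully determines X.
fair_coin = [0.5, 0.5]
determined = [[0.5, 0.0], [0.0, 0.5]]
print(entropy(fair_coin), conditional_entropy(determined))
```

A fair coin carries one bit of uncertainty, while H(X|Z) drops to zero when knowing Z leaves nothing uncertain about X.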

Support

| Variable types | Multi-variate | Conditional | Notes |
|---|---|---|---|
| Discrete | Yes | Yes | Multi-variate and conditional since 7.12 |
| Continuous | Yes | Yes | Since 7.12 |
| Hybrid | Yes | Yes | Approximate, since 7.16 |

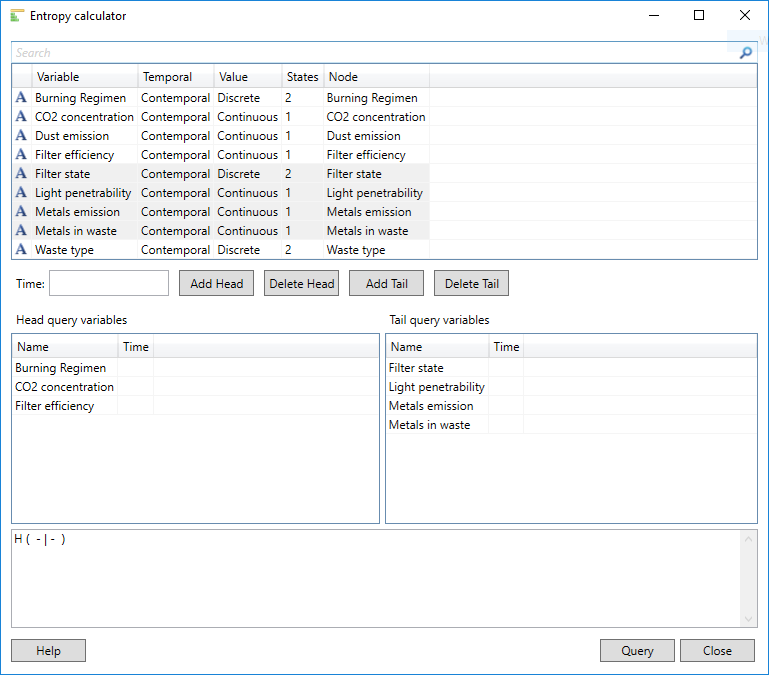

Entropy calculator

Since version 7.16

The entropy calculator is available from the Analysis tab in the user interface. It can be used to calculate the entropy of one or more variables X, optionally conditioned on one or more variables Z.

Entropy can be calculated in BITS (base 2) or NATS (base e).
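The two units differ only by a constant factor, since changing the base of a logarithm rescales it by ln 2. A small sketch of the conversion follows; the helper names are hypothetical and chosen for this example.

```python
import math


def nats_to_bits(h_nats):
    """Convert an entropy value from nats (base e) to bits (base 2)."""
    return h_nats / math.log(2.0)


def bits_to_nats(h_bits):
    """Convert an entropy value from bits (base 2) to nats (base e)."""
    return h_bits * math.log(2.0)
```

For example, one bit corresponds to ln 2 nats, which is roughly 0.693.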

NOTE

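Entropy involving continuous variables is typically reported in NATS. This is because Gaussians belong to the exponential family of distributions, whose densities are written in terms of the natural exponential, so natural logarithms give the simplest expressions.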
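To see why nats are convenient here, consider the standard closed form for the differential entropy of a univariate Gaussian, 0.5 * ln(2 * pi * e * variance). The sketch below evaluates it; the function name is an assumption for this example and does not describe how the calculator is implemented.

```python
import math


def gaussian_entropy_nats(variance):
    """Differential entropy, in nats, of a univariate Gaussian with the given variance."""
    if variance <= 0.0:
        raise ValueError("variance must be positive")
    return 0.5 * math.log(2.0 * math.pi * math.e * variance)


# Hypothetical usage: entropy of a standard normal distribution.
print(gaussian_entropy_nats(1.0))
```

Unlike the discrete case, this value can be negative when the variance is small enough.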